Google unveils its most awaited and capable AI model “Gemini”, the multimodality AI which the firm aspires to surpass OpenAI’s supreme GPT-4.

It’s the beginning of new era of AI at Google, says CEO Sundar Pichai. And it’s not Bard or PaLM-2 the CEO talks about, but the newly unveiled “Gemini”, which had been in works for years. Pichai introduced Gemini in the I/O developer conference in June 2023 and five months later, the AI model is here for public use, though not completely.

Google promises on Gemini AI, to be the next revolutionary model that will ultimately enhance every product it touches upon. “One of the powerful things about this moment is you can work on one underlying technology and make it better and it immediately flows across our products,” Pichai comments on Gemini.

What is Gemini?

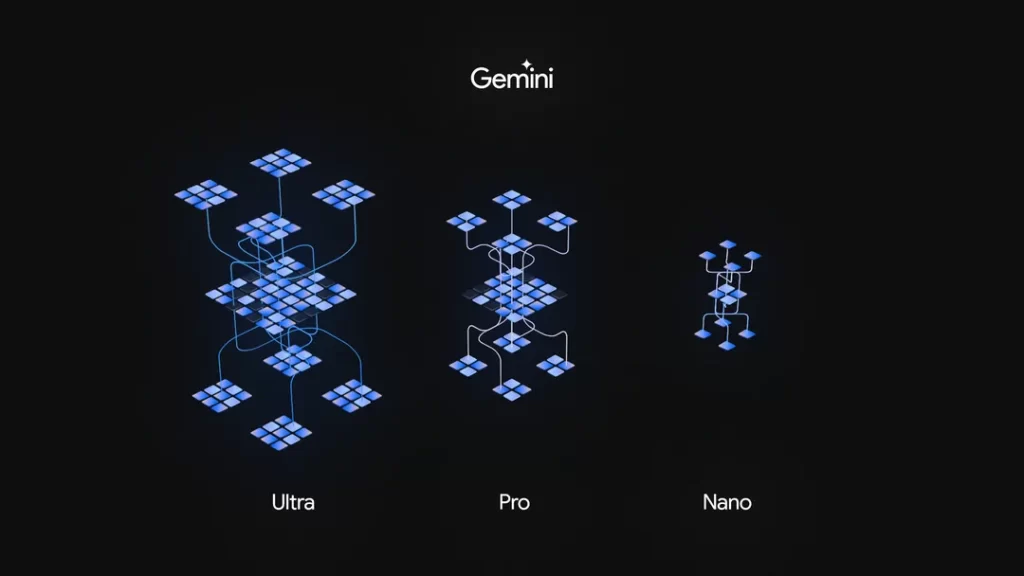

Gemini is the latest artificial general intelligence model of Google, which the firm anticipated for years for launch. Gemini is more than a single AI model, that comes with three flavors or versions – Gemini Nano, Gemini Pro and Gemini Ultra – each one for different potential use cases.

Gemini Nano – Lighter version meant to be run natively and offline on Android devices.

Gemini Pro – Ample version for powering several Google AI services including Bard.

Gemini Ultra – the giant version of Gemini that is most capable and deployed for handling data centers and enterprise applications.

Gemini Nano favors the on-device tasks by introducing new features in Pixel 8 Pro such as Smart Reply in WhatsApp and to a larger extent, the AI is equipped on Tensor 3 processor too.

Gemini Pro on the other hand will be the backbone of every Google AI that’s open for public, starting from Bard to Duet AI, Chrome Ads as well as in SGE (Search Generative Experience) of Google.

Gemini Pro will also be seen in Vertex AI for enterprise customers and Google’s Generative AI Studio developer suite, which will aid developers. Gemini Pro will be accessible from December 13th and the simplest way to access Gemini Pro is via Bard.

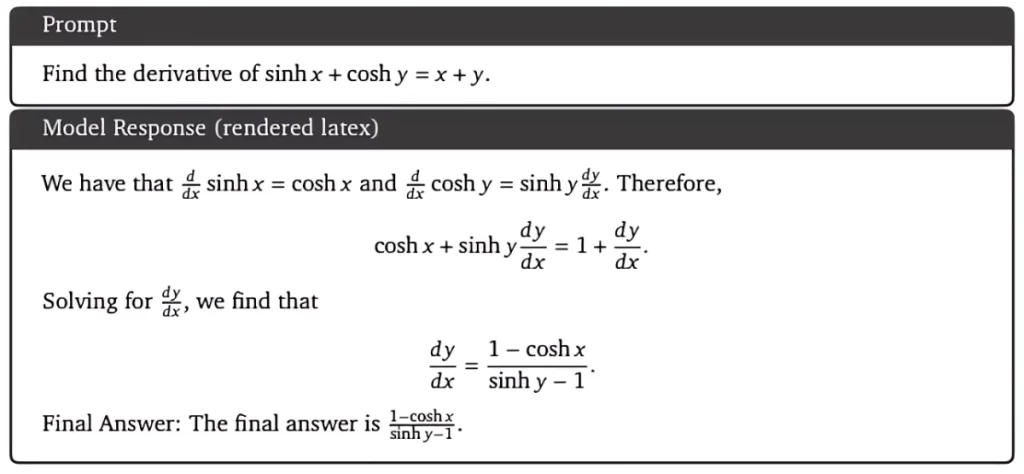

Gemini Ultra, likely as Gemini Pro, is a multimodal AI which can interpret nuanced information specifically in complicated topics such as math and physics.

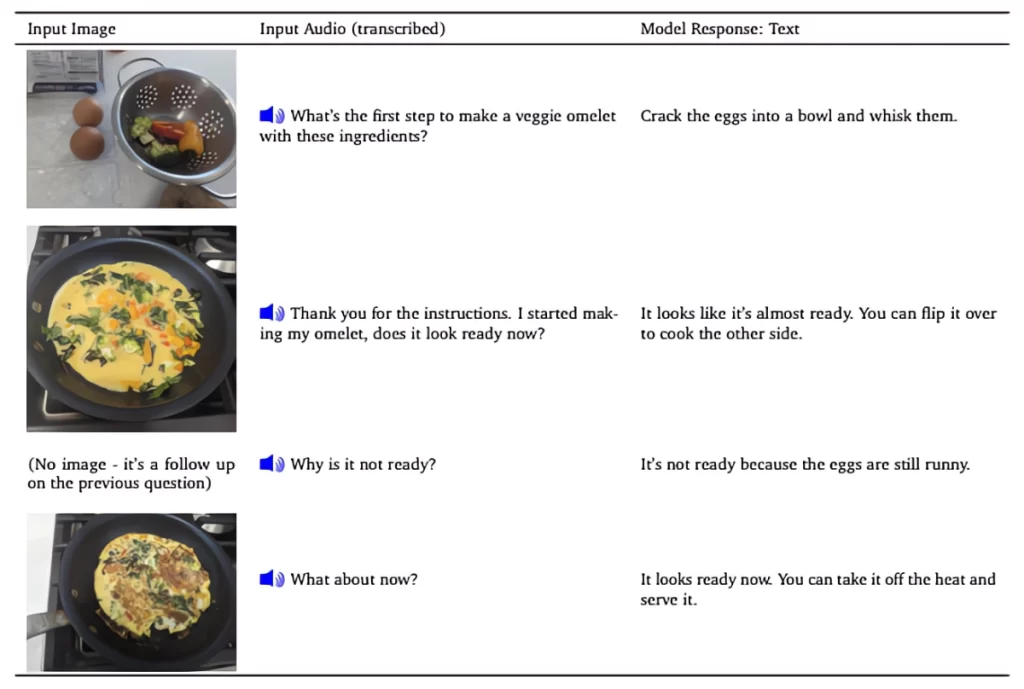

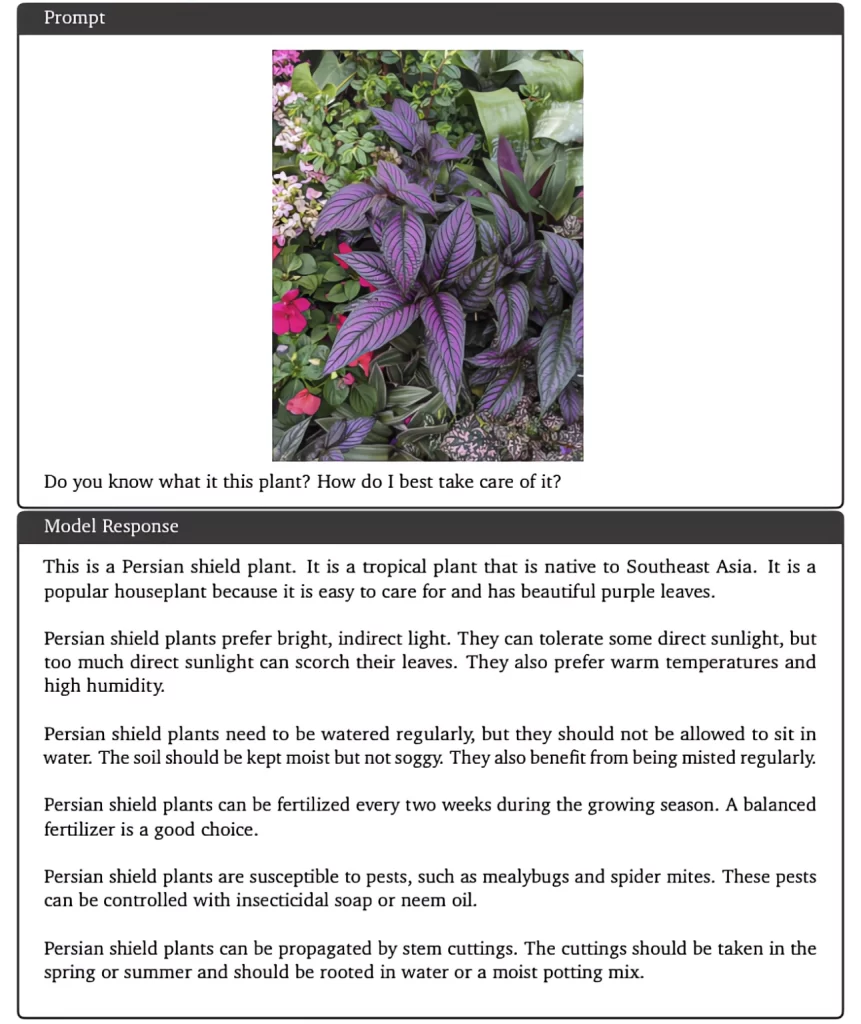

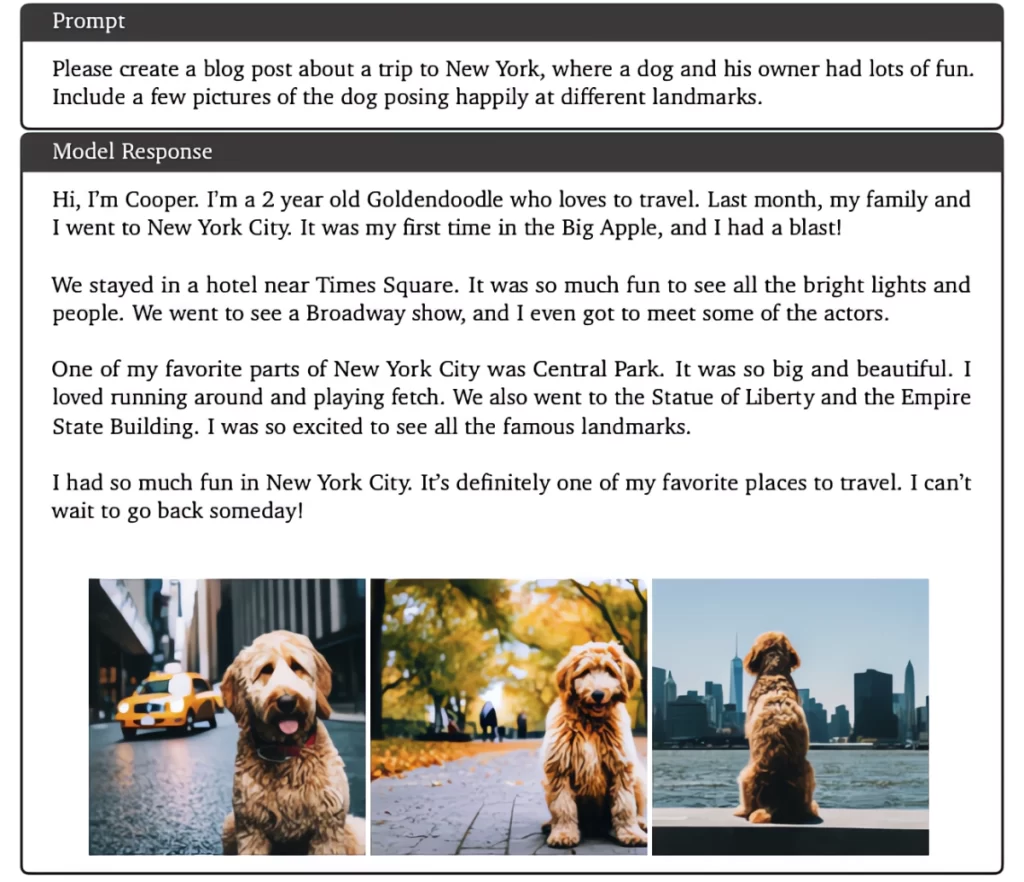

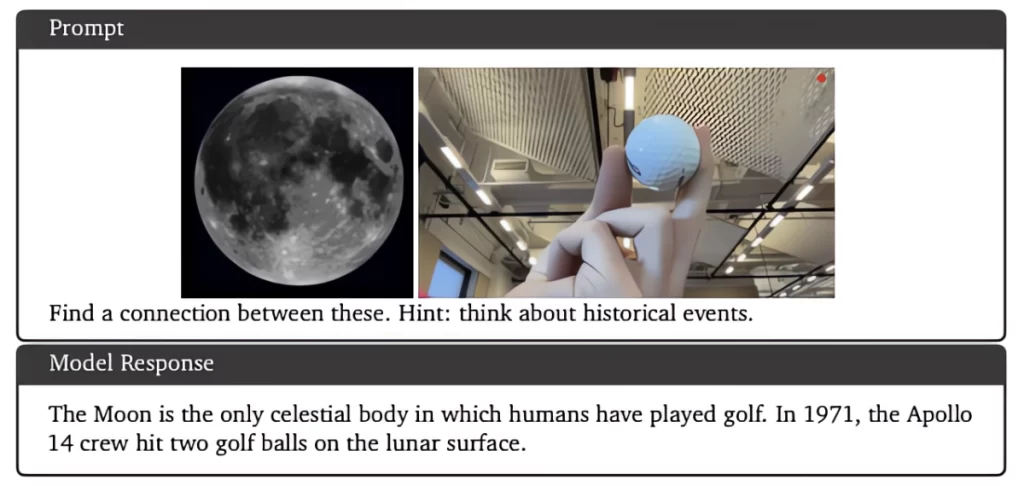

Unlike OpenAI, where it developed separate AI models for specific purposes like ChatGPT for texts, Dall-E for images and Whisper for voice, Google aspires a single model for everything, what they call as multimodality model. Gemini is such a native multimodal AI. Gemini Pro and Ultra are pre-trained and fine-tuned on a large set of codebases, not just in texts, but also in audio, images and videos. The AI can comprehend texts, images, audio and code while answering to a question or query.

Gemini vs ChatGPT

OpenAI’s ChatGPT, due to its performance and trendsetting the AI domain, is actually considered a benchmark model to be compared for other AI models. Google sees ChatGPT as a potential rival of its search-engine business. It also issued code-red when GPT launched. Google then urged AI projects and proliferated several AI models within a year and the ultimate awaited AI project was this Gemini. So now, it’s time for evaluating it – Gemini vs GPT-4.

Google ran 32-well established benchmarks comparing the two models, from broad overall tests like the Massive Multi-task Language Understanding (MMLU) benchmark to one that compares two models’ ability to generate Python code. Gemini leads in 30 out of the 32 benchmark tests.

“I think we’re substantially ahead on 30 out of 32” of those benchmarks, says Demis Hassabis, CEO of Google’s DeepMind. Gemini Ultra scored 90% in MMLU benchmark, which is higher than that of GPT-4’s 86.4%. Gemini is also the first model to outperform human experts on MMLU, the firm claims.

Though Gemini Ultra & GPT-4 performance in many benchmark tests differs vaguely, Google’s AI model takes over OpenAI’s with its multimodality. The unique advantage of Gemini comes from its ability to understand and interact with video and audio. The multimodality aspect of Gemini eligibles it to understand and interpret more senses.

In one such example, Gemini is seen generating code for a video based on the demo visual as input. However, Gemini lags behind in commonsense reasoning test with 87.8% lower than GPT-4’s 95.3%. Another snag is being Gemini’s context window ~24,000 words, which is lower than that of GPT-4 ~ 100,000 words. (Context window refers to the text that an LLM can process at the time the information is generated.)

To put it in a nutshell, though Gemini & GPT-4 seems to go hand-in-hand, the former outperforms latter in some aspects, especially in image, video and audio comprehension and interpretation. While OpenAI approaches different tasks with different models, Google’s Gemini is one huge model for everything.

Is Safety compromised?

When it comes to being responsible, Google is extra cautious since the firm is stabbed multiple times for privacy, security and safety concerns. Indeed, as these AI tools pose a significant threat in case of its exploitation, Google holds stiff to its ‘bold and responsible’ mantra. That’s why Google took an ample 6 years to release its first AI model, though the firm launched its first language modeling transformer back in 2017.

Google says it has worked hard to ensure Gemini’s safety and responsibility, both through internal and external testing and red-teaming. Hassabis acknowledges that one of the risks of launching a state-of-the-art AI system is that it will have issues and attack vectors no one could have predicted. “That’s why you have to release things,” he says, “to see and learn.”

Google is taking the Ultra release particularly slowly; Hassabis compares it to a controlled beta, with a “safer experimentation zone” for Google’s most capable and unrestrained model. Basically, if there’s a marriage-ruining alternate personality inside Gemini, Google is trying to find it before you do.

(For more such interesting informational, technology and innovation stuffs, keep reading The Inner Detail).

Kindly add ‘The Inner Detail’ to your Google News Feed by following us!