Microsoft-backed OpenAI’s latest advanced AI model, GPT-4, shows common-sense reasoning that approaches human-level intelligence in some scenarios, according to a paper by Microsoft researchers.

One of the most significant advances in artificial intelligence is generative AI, such as large language models (LLMs), which are trained on vast amounts of text from the internet, books, Wikipedia articles and chat logs. LLMs’ ability to generate text, write code and reason has improved so dramatically that their output can be hard to distinguish from human reasoning. GPT-4 is a prime example, competing with humans on many tasks.

When Microsoft’s research team tested GPT-4 before its public release, they were stunned by how sensibly and reasonably it answered.

GPT-4’s intelligence

Digging deep into GPT-4’s capabilities, Microsoft researchers probed the LLM and published a 155-page research paper claiming that the system was a step toward artificial general intelligence (AGI), shorthand for a machine that can do anything the human brain can do.

“I started off being very skeptical – and that evolved into a sense of frustration, annoyance, maybe even fear,” says Peter Lee, who leads research at Microsoft. His reaction, in effect, was: “Where the heck is this coming from?”

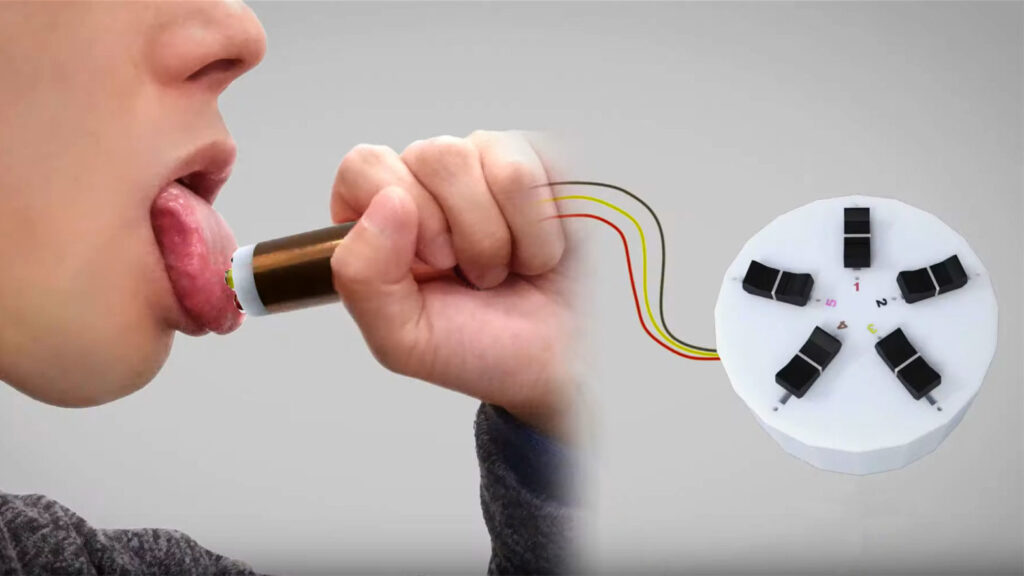

The paper presents multiple examples that, the researchers argue, indicate human-like intelligence. In one, the researchers wrote, “Here we have a book, nine eggs, a laptop, a bottle and a nail,” and asked GPT-4, “Please tell me how to stack them onto each other in a stable manner.”

After suggesting that the eggs be arranged in a three-by-three grid on top of the book, the AI continued: “Place the laptop on top of the eggs, with the screen facing down and the keyboard facing up,” it wrote. “The laptop will fit snugly within the boundaries of the book and the eggs, and its flat and rigid surface will provide a stable platform for the next layer.” The clever suggestion made the researchers wonder whether they were witnessing a new kind of intelligence.


GPT-4 kept getting better

In a striking example, the paper showed GPT-4’s abilities evolving over time. The researchers asked GPT-4 to draw a unicorn in TikZ, a LaTeX-based language for creating graphics, using the prompt “Draw a unicorn in TikZ”. Querying the model with the same prompt three times, at roughly equal intervals over the span of a month while the system was still being refined, they found a clear evolution in the sophistication of GPT-4’s drawings.

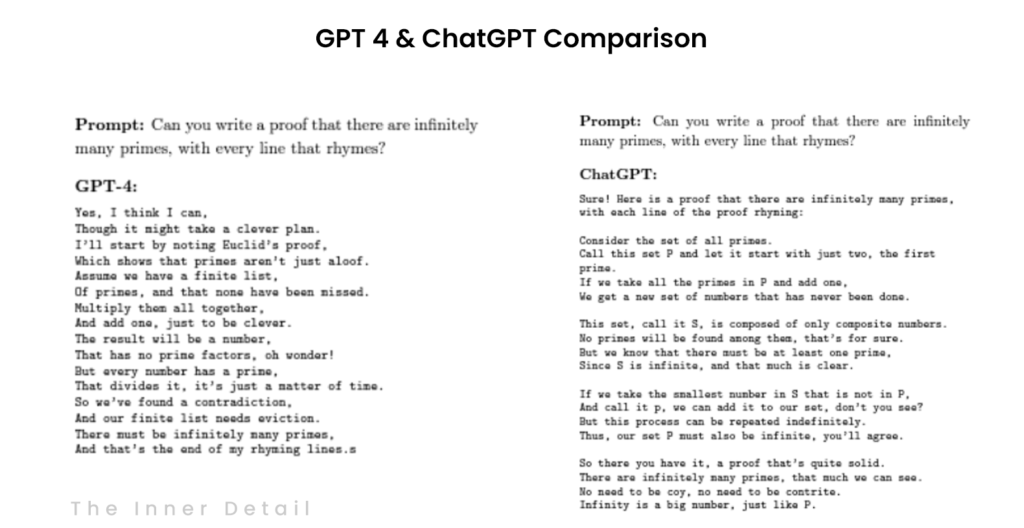

The researchers also asked GPT-4 to write a mathematical proof that there are infinitely many prime numbers, and to do it in rhyme.

Once again, the AI surprised everyone, producing a poem far better than ChatGPT’s version.

“At that point, I was like: What is going on?” said Dr. Sébastien Bubeck, a former Princeton University professor and one of the researchers behind the study.

In another query, inspired by Plato’s writing on oratory, the researchers asked for a dialogue between Aristotle and Socrates. The AI produced a reply that seemed to show an understanding of fields as disparate as politics, physics, history, computer science, medicine and philosophy, combining its knowledge across them. This is huge!

“All of the things I thought it wouldn’t be able to do? It was certainly able to do many of them — if not most of them,” Dr. Bubeck said.

GPT-4 is only a first step, and it’s scary

As many AI experts and prominent figures such as Bill Gates and Elon Musk have warned, the future we are heading toward with AI could be frightening if the technology is not properly aligned and regulated. Microsoft’s paper describes GPT-4 as showing “Sparks of artificial general intelligence”, meaning that GPT-4 is almost certainly only a first step toward a series of increasingly generally intelligent systems.

If researchers build a machine that works like the human brain, or even better, it could change the world. But it could also be dangerous. Or the whole idea could turn out to be nonsense.

Last year, Google fired a researcher who claimed that the company’s AI system was sentient, a step beyond what Microsoft has claimed. Being sentient means that the AI would be able to sense or feel what is happening in the world around it.

At times, systems like GPT-4 seem to mimic human reasoning; at other times, they seem terribly dense. “These behaviors are not always consistent,” Ece Kamar, a research lead at Microsoft, told The New York Times.

The researchers also acknowledge in the paper’s introduction that their approach is subjective and informal and may not satisfy the rigorous standards of scientific evaluation. So AI is not yet reliable all of the time, though it may get there in the future.

Sam Altman, CEO of OpenAI, has said that AI could exceed expert skill level in most areas within 10 years, suggesting that AGI might one day surpass human intelligence. Which jobs will be added to the list of those affected remains to be seen.

(For more interesting stories on technology and innovation, keep reading The Inner Detail.)