Google announced its ChatGPT rival, Bard, on February 6, 2023, and is now bringing AI to Maps, Search and Google Translate to make them more immersive and engaging.

Generative AI, an advanced form of artificial intelligence, has seen a surge in applications since the launch of OpenAI’s ChatGPT. The chatbot shifted public perception of AI and its adaptability, and widened what the technology can offer people.

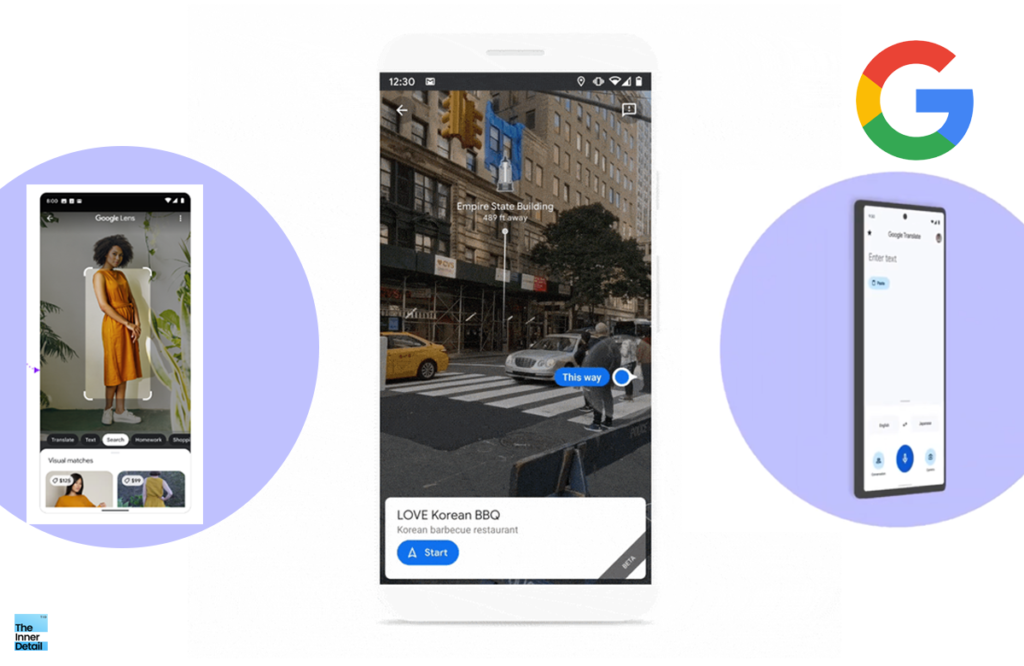

After announcing Bard, Google revealed several upgrades to Search, Google Maps and Google Translate. They use AI and augmented reality (AR) to provide expanded answers and live search features that can instantly identify objects and locations around a user.

Immersive & Live View tech for Google Maps

Google demonstrated two upgrades to its Google Maps app, Immersive View and Live View, at an event in Paris, France. Immersive View was first previewed last year. It uses AI and AR to fuse billions of Street View and aerial images into a digital 3D model of the real world that can guide users along a route.

Live View overlays AR elements on the real world to help you better understand your surroundings. Together, Live View and Immersive View show you landmarks, street names, ATMs, restaurants and shops around you, and point the way to your destination with pop-up-style elements on the screen. They may even show a shop’s opening hours and ratings. This makes it much easier to find your way through the streets.

Immersive View also displays aerial views of buildings, monuments and tourist spots, giving users a 3D view of a place as they virtually soar over it on screen. To create these realistic 3D scenes, Google uses an advanced AI technique called neural radiance fields (NeRF), which transforms ordinary photos into 3D representations.

A “time slider” shows an area at different times of day, along with weather forecasts. So if you visit the Taj Mahal during the day, you could also see how it looks at night, provided the feature comes to India. Currently, the Immersive View and Live View features are available in only five cities: London, Los Angeles, San Francisco, New York and Tokyo.

It is also worth noting that Google Maps can identify landmarks not only in real time but also in saved video.

Over the next few months, the features will extend to more than 1,000 new airports, train stations and malls in Barcelona, Berlin, Frankfurt, London, Madrid, Melbourne, Paris, Prague, Sao Paulo, Singapore, Sydney and Taipei.

“You can also spot where it tends to be most crowded so you can have all the information you need to decide where and when to go,” Google said.

Advanced Search – MultiSearch, Bard

Cameras have become a powerful way to explore and understand the world around you. Google has extended its search options so that people can search with images and video.

In the coming months, users will be able to use Lens to “search the screen” on Android devices globally. With this technology, you can search what you see in photos or videos across the websites and apps you know and love, like messaging and video apps, without having to leave the app or experience.

With multisearch, you can search with a picture and text at the same time, opening up entirely new ways to express yourself. Today, multisearch is available globally on mobile, in all languages and countries where Lens is available.

After multisearch, Google turned to conversational AI to compete with ChatGPT, unveiling Bard, its experimental conversational AI service. Bard is powered by Google’s language and conversation model, LaMDA (Language Model for Dialogue Applications), which the firm introduced in 2021.

Bard draws its knowledge of the world from the web and presents it in the way the user asks for. It can interpret, compare and summarize information, and deliver it as a clear, easy-to-understand answer. Bard also goes a step beyond ChatGPT in that it can comment on the latest news and events.

Google Translate with AI & ML

The tech giant also introduced upgrades to Google Translate that make the app more contextual, offering users examples in the intended, translated language. So whether you’re trying to order bass for dinner or play a bass during tonight’s jam session, you have the context you need to translate accurately and use the right turns of phrase, local idioms or appropriate words for your intent. The update will roll out in the coming weeks for languages including English, French, German, Japanese and Spanish.

By combining translation with Google Lens, which lets users search what they see through the camera, Google can now use machine learning to blend translated text into complex images so that it looks and feels much more natural. The feature is now available on Android phones with 6GB or more of RAM.

After being rattled by the arrival of ChatGPT, Google is now in full swing adding useful, adaptive and interesting features to all of its apps and services, showing how much the company can still deliver to its users.

(For more interesting stories on technology and innovation, keep reading The Inner Detail.)