OpenAI, the maker of ChatGPT, recently released a new tool that estimates whether a text was written by AI. It is meant to help curb plagiarism and to distinguish human-written from AI-written text, especially in educational institutions.

OpenAI’s ChatGPT, an AI chatbot that can answer questions on almost any topic, is currently the hottest topic in the tech world. Its potential reach has unsettled even Google, one of the world’s strongest brands. Google reportedly declared a “code red” and set out to build an AI chatbot of its own to compete with ChatGPT. Microsoft’s investment in OpenAI may also have added to the tech giant’s concern.

Despite its rapid growth and real-world usage, ChatGPT has already raised concerns about the need to regulate AI so that it does little or no harm to the education sector. Students might misuse the technology for their assignments, which could eventually hurt learning.

Sam Altman, OpenAI’s CEO, had said there could be ways for the company to help teachers spot AI-written text.

AI Text-Classifier

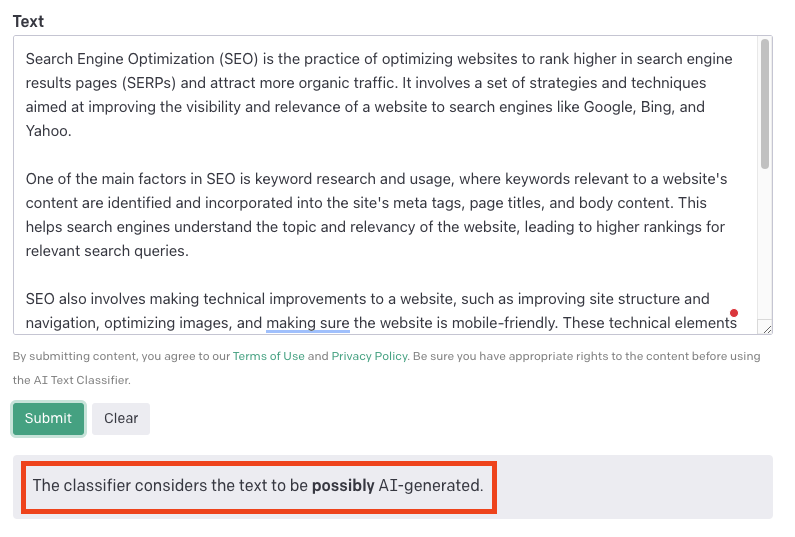

To address this, OpenAI has introduced a tool called “AI Classifier”, which is trained to distinguish between human-written and AI-written text.

The tool can inform mitigations for false claims that AI-generated text was written by a human. Examples include using AI tools for academic dishonesty and passing off an AI chatbot as a human.

The AI Classifier is still a work in progress and can make mistakes. Its makers themselves say that the classifier is not fully reliable, and they list several limitations to consider before relying on it. The tool does not give a definitive verdict; it only indicates how likely a text is to be AI-written, at a ‘probably’, ‘possibly’ or ‘likely’ level.

In OpenAI’s evaluations on a set of English texts, the classifier correctly identified 26% of AI-written text as “likely AI-written”, but it also incorrectly labelled human-written text as AI-written 9% of the time (false positives).
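To see what these two rates mean in practice, here is a rough sketch that applies Bayes' rule to them. The share of submitted texts that are actually AI-written (`ai_share`) is not given in OpenAI's figures; the values used below are purely illustrative assumptions, and the function name is made up for this example.

```python
def flagged_precision(true_positive_rate, false_positive_rate, ai_share):
    """Estimate the share of flagged texts that are really AI-written.

    ai_share is an assumed fraction of all texts that are AI-written;
    it is NOT a figure reported by OpenAI.
    """
    flagged_ai = true_positive_rate * ai_share              # AI texts correctly flagged
    flagged_human = false_positive_rate * (1.0 - ai_share)  # human texts wrongly flagged
    return flagged_ai / (flagged_ai + flagged_human)


TPR = 0.26  # 26% of AI-written text is flagged as "likely AI-written"
FPR = 0.09  # 9% of human-written text is wrongly flagged

# Hypothetical scenarios: 10% and 50% of submitted texts are AI-written.
for ai_share in (0.10, 0.50):
    precision = flagged_precision(TPR, FPR, ai_share)
    print(f"AI share {ai_share:.0%}: {precision:.0%} of flagged texts are AI-written")
```

Under these assumptions, the fewer AI-written texts there are in the pile, the larger the share of flagged texts that actually come from human writers, which is why a “likely AI-written” label alone should not be treated as proof.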

Limitations of the AI Classifier

Here are the limitations, according to the AI Classifier’s creators.

1. The AI classifier is unreliable on texts shorter than 1,000 characters. Longer texts give the classifier more to work with, but even then there is no guarantee that it will be correct.

2. The tool works well only on English text and is unreliable on code.

3. Factual or predictable answers cannot be reliably told apart by the AI classifier. For example, a list of the Seven Wonders of the World will be the same whether a human or an AI wrote it, so such text is difficult to detect, the blog says.

4. False accusations, such as labelling human-written text as AI-written, are also possible.

5. AI-written text that has been edited by humans can evade the classifier, which is unable to identify such edited text.

6. Classifiers based on neural networks are known to be poorly calibrated outside of their training data. For inputs that are very different from the text in its training set, the classifier is sometimes extremely confident in a wrong prediction.

GPTZero – AI-text finder

OpenAI’s classifier is not the first of its kind. Edward Tian, a student at Princeton University, had earlier released a tool called “GPTZero”, which serves the same purpose of detecting whether a text is AI-written.

Under the tagline “Humans Deserve the Truth”, GPTZero’s website notes that it is built for educators.

Teachers, professors and other educational staff can try the website for free: you just enter the text or upload a file to check who wrote it.

As AI chatbots rapidly grow in number, so do the chances of their being exploited with malicious intent, which calls for the technology to be guided onto the right track.

“Our work on the detection of AI-generated text will continue, and we hope to share improved methods in the future,” the creators write.

(For more interesting articles on information, technology and innovation, keep reading The Inner Detail.)