The Google I/O event, held in California, US, on May 10, was packed with new products and features. They ranged from Pixel devices like the Pixel Fold and Pixel Tablet to AI features in Search, an upgraded Bard opened to everyone, and a bunch of other AI capabilities across Google’s services. Several of the AI features were genuinely interesting and impressive, and Google made a serious attempt to blend its generative AI into all of its products and services.

Google CEO Sundar Pichai kicked off the keynote. Here are the biggest announcements from the event.

3 New Pixel devices – Pixel 7a, Pixel Fold & Pixel Tablet

Google launched three new hardware devices, a smartphone, a foldable and a tablet, which were a centrepiece of the keynote.

Google refreshed its mid-range A-series with the new Pixel 7a, priced at $499 for the base version. It runs on the Tensor G2 chip and has a 6.1-inch 90Hz OLED display.

Pixel Fold is Google’s first entry into the foldable market, competing with Samsung’s Galaxy Z Fold series. The $1,799 foldable features a 5.8-inch OLED outer screen that unfolds into a larger, tablet-like 7.6-inch OLED display. With the Tensor G2 chip, a triple rear camera (48 MP + 10.8 MP + 10.8 MP), an 8 MP front camera and a decent 4,821 mAh battery, the Pixel Fold looks impressive.

After the foldable, Google also enters the tablet market with the Pixel Tablet. It is not just a tablet but also a smart display, thanks to its magnetic charging dock that doubles as a speaker. The $499 tablet has an 11-inch screen and, like the other two devices, runs on the Tensor G2 chip.

All the devices are now available for pre-order from the Google Store.

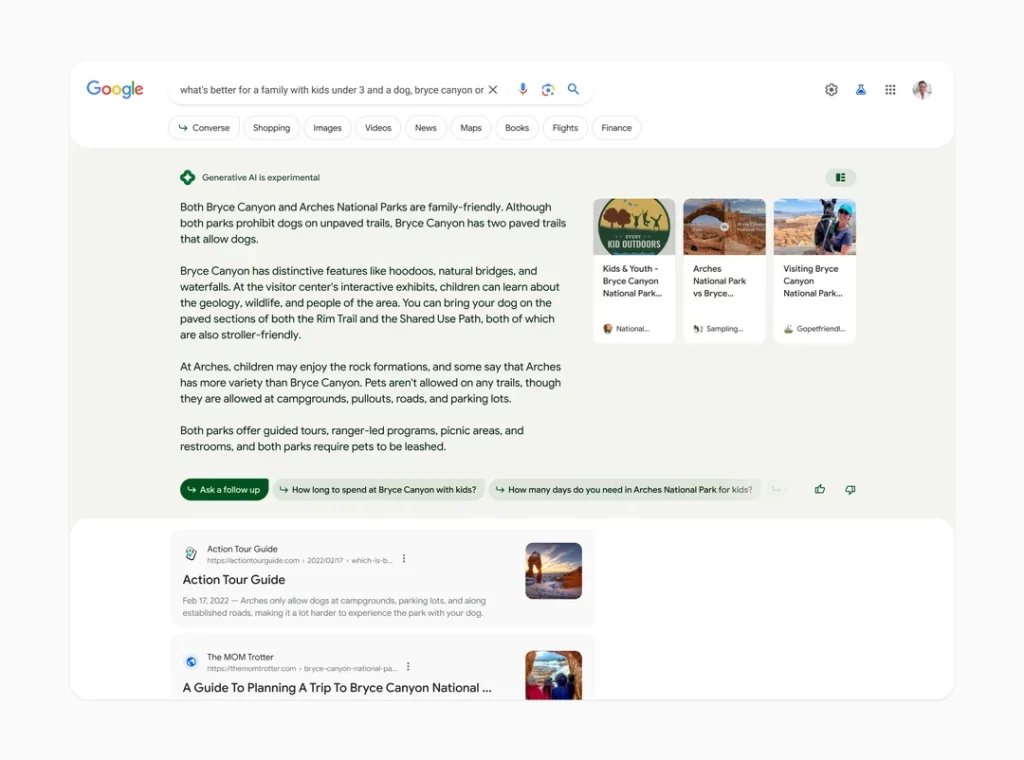

Google Search with AI features

Google’s AI showing was eagerly awaited, with many wondering whether it could outperform Microsoft’s ChatGPT-powered Bing.

Among its AI advancements, Google is adding a new element to Search called AI snapshots. Once this feature, part of the new ‘Search Generative Experience’, becomes available, you’ll get AI-generated answers directly at the top of the search page, above the conventional Google results. This content is produced by Google’s generative AI; it answers your question and adds more context to it.

The AI snapshots are powered by PaLM 2, the updated version of Google’s large language model. PaLM 2 was also unveiled on May 10 and already powers 25 Google products and features, including Bard.

Bard goes worldwide

Google announced Bard in February 2023 as an experiment, but access to the AI chatbot was limited to a few countries and gated by a waitlist.

Google is now opening Bard to everyone, whether or not you were on the waitlist. Bard also gets new features, such as an option to export generated text to Google Docs or Gmail, a new dark mode, and several visual search features.

Bard will also integrate Adobe’s AI image generator, Firefly, to provide text-to-image generation within the chat itself. It is also gaining support for a few more languages and integrations with third-party services like OpenTable and Instacart.

AI in Android devices

There’s a lot to cover under this heading, so we’ll keep it brief.

Google is launching new AI-enabled features for its Android devices, including Magic Compose in Messages, a more customizable lock screen, emoji wallpapers, cinematic wallpapers and more.

Magic Compose suggests responses based on the context of your messages and lets you use AI to rewrite them in different writing styles.

With emoji wallpapers, you can customize your device using your favorite emoji combinations, patterns and colors. You can also use AI-generated images as your wallpaper.

Cinematic wallpapers, meanwhile, use on-device machine learning to turn your favorite photos into stunning 3D images. The cinematic effect comes to life when you unlock or tilt your device. That looks impressive!

Android is also adding Ultra HDR and night mode support for use in social media apps like Instagram. Google is also updating Android’s Find My Device feature and improving safety by using AI to block spam messages. Google says this protected users from 100 billion suspected spam messages in 2022.

Moreover, Google has optimized over 50 of its apps, including Gmail, Photos and Meet, for large screens such as tablets and foldables. The apps support full-screen views and adapt their layout to the screen size. For example, a page in Google Chrome will look different on a phone, a foldable and a tablet, adapting to each display.


AI in Google Apps

Going through every feature one by one would take as long as the Android section. Google is blending AI into nearly all of its products, and the changes are most noticeable in Gmail, Maps and Photos, which we use most often.

Gmail – The most notable AI addition in Gmail is “Help me write”, which brings PaLM 2-based generative AI right next to the Send button in the ‘Compose’ window. Here’s how it works: when you want to reply to an email, click ‘Reply’ on that email and tap the magic-wand-like button next to ‘Send’. Then type in what the reply should say, and the AI will write the email for you, automatically pulling details from the original email. This is interesting!

Maps – After introducing 360-degree views of landmark tourist spots in Maps, Google is now bringing Immersive View for routes to Maps. This feature uses AI to create a high-fidelity representation of your route. Say you’re in New York City and want to go on a bike ride. Maps gives you a couple of options close to where you are. The one along the waterfront looks scenic, but you want to get a feel for it first, so you tap Immersive View for routes. It’s an entirely new way to look at your journey: you can zoom in for an incredible bird’s-eye view of the ride.

There’s more information available too. You can check air quality, traffic and weather, and see how they might change. Immersive View for routes will begin to roll out over the summer, and launch in 15 cities by the end of the year, including London, New York, Tokyo and San Francisco.

Photos – A new Magic Editor experience in Google Photos was showcased at the event, taking photo editing to a whole new level. With this AI-powered editing feature, you can enhance the sky, move a person or object, erase unwanted parts of a photo and more. It will first be available to some Pixel users later this year.

New and improved Wear OS

Google announced that a new version of its smartwatch OS, Wear OS 4, is coming this year with improved battery life, backup and restore options, and new accessibility features.

Apps like WhatsApp are debuting on Wear OS this year, and Google is improving the experience of its own apps, such as Gmail and Calendar, on Wear OS smartwatches.

Wear OS 4 is also getting better smart home integrations that will let you adjust lighting, control media, and see animated previews of camera notifications, from your wrist.

Duet AI for Google Workspace

In addition to the “Help me write” feature in Docs and Gmail, Google introduced “Duet AI” for Google Workspace, which provides a handful of AI tools in Slides, Meet, Sheets and more.

The AI features vary by app: generating images from text descriptions in Slides and Meet, creating custom plans in Sheets, and more. For now, however, Duet AI is only available to those who sign up for its waitlist.

PaLM 2 – the backbone of Google’s AI

Google’s large language model PaLM has been upgraded to PaLM 2, which underpins the fundamental research and infrastructure behind Google’s products. Over 25 products and features now use PaLM 2 to bring AI into them. PaLM 2 also comes in several variants tailored to specific uses.

Basically, PaLM 2 is stronger at logic and reasoning than its predecessor and supports more than 100 languages. Read more about Google’s latest AI model, PaLM 2, here.

Google Search’s new Perspectives feature

To make Search more convincing and reliable, Google will also surface answers from actual people. Google must have noticed that a huge number of users append “Reddit” to their searches, because it’s rolling out a new ‘Perspectives’ feature that sources answers from Reddit, Stack Overflow, YouTube, personal blogs and other sites.

Google was spurred into action by the launch of ChatGPT and has enhanced dozens of its features with AI. Although many of these features won’t roll out until later this year, Google’s push is commendable. There are many more features we haven’t covered here, which we will address in upcoming articles.

We hope you found this page useful!

(For more interesting stories on technology and innovation, keep reading The Inner Detail.)