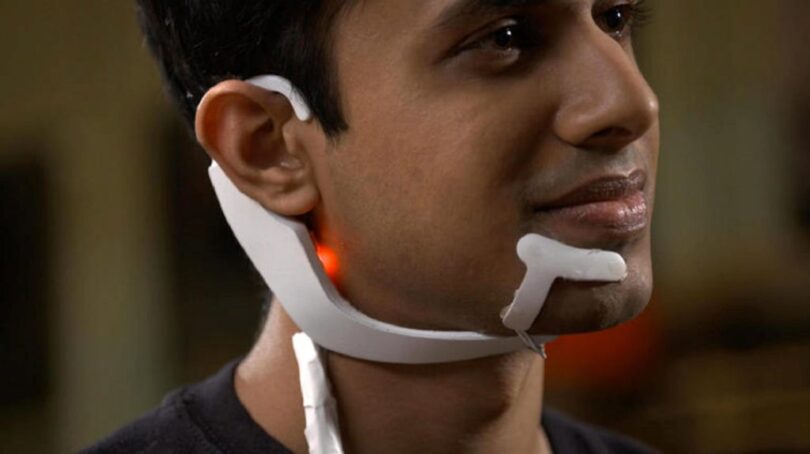

Arnav Kapur, an Indian-born PhD student at MIT, has developed a device named “AlterEgo” that allows voiceless conversation between you and the internet, AI, or voice assistants. It’s like connecting your brain to the internet seamlessly.

Researchers are constantly working to integrate technology with human life, striving to make it as seamless and natural as possible in our day-to-day routines. While smartphones have started to eat into our time and distract us from productive work, firms are working on products and services that do the opposite and don’t feel like a distraction. Instead, the device feels naturally bound to reality; for example, the Humane wearable, which has no display but is voice-interactive.

Likewise, this device by an MIT student connects you to the internet and lets you converse with AI voice assistants, but without using your voice and without any display.

AlterEgo

In a new approach, the wearable, called AlterEgo, lets you converse with machines or AI without even opening your mouth. Instead, you say the words to yourself silently, similar to mumbling.

“AlterEgo is a non-invasive, wearable, peripheral neural interface that allows humans to converse in natural language with machines, artificial intelligence assistants, services, and other people without any voice—without opening their mouth, and without externally observable movements—simply by articulating words internally,” writes MIT.

The device seeks to augment human intelligence with the internet and AI by enabling a natural extension of the user’s own cognition to technology. The wearable system captures peripheral neural signals when internal speech articulators are volitionally and neurologically activated during a user’s internal articulation of words. This enables a user to transmit and receive streams of information to and from a computing device or any other person without any observable action.

How does it work?

The wearable system reads electrical impulses from the surface of the skin in the lower face and neck that occur when a user is internally vocalizing words or phrases – without actual speech, voice or discernible movements.

By understanding what you are saying internally, the device connects to the internet or an AI and fetches you an answer. Feedback is given to the user as audio via bone conduction, which does not disrupt the user’s usual auditory perception and makes the interface closed-loop. This enables a human-computer interaction that is subjectively experienced as completely internal to the human user, like speaking to oneself.

AlterEgo is not a mind-reading device; it reads signals from your facial and vocal cord muscles when you intentionally say words or phrases silently. In return, information is conveyed back to you through bone conduction.

Components of AlterEgo

The AlterEgo system consists of the following components:

- A new peripheral myoneural interface for silent speech input which reads endogenous electrical signals from the surface of the face and neck that are then processed to detect words a person is silently speaking.

- Hardware and software to process electrophysiological signals, including a modular neural network-based pipeline trained to detect and recognize word(s) silently spoken by the user.

- An intelligent system that processes user commands and queries and generates responses.

- Bone conduction output to give audio information back to the user, such as the answer to a question, or confirmation regarding a command.
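
To make the closed loop described above concrete, here is a minimal Python sketch of how the four components might hand data to one another. It is not AlterEgo’s code: every name in it (`ClosedLoopInterface`, `toy_recognizer`, `toy_assistant`) is hypothetical, the recognizer is a simple amplitude threshold standing in for the trained neural network, and the bone-conduction output is replaced by appending text to a list.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class ClosedLoopInterface:
    """Hypothetical wiring of the components listed above."""
    recognize: Callable[[Sequence[float]], str]  # skin-surface signals -> silently spoken word
    respond: Callable[[str], str]                # recognized word -> answer text
    play: Callable[[str], None]                  # answer text -> audio output (bone conduction)

    def step(self, signals: Sequence[float]) -> str:
        """Run one input -> recognition -> response -> output cycle."""
        word = self.recognize(signals)
        answer = self.respond(word)
        self.play(answer)
        return answer


def toy_recognizer(signals: Sequence[float]) -> str:
    # Stand-in for the neural network: classify by mean absolute amplitude.
    level = sum(abs(s) for s in signals) / len(signals)
    return "time" if level > 0.5 else "weather"


def toy_assistant(query: str) -> str:
    # Stand-in for the intelligent system that answers queries.
    answers = {"time": "It is noon.", "weather": "It is sunny."}
    return answers.get(query, "Sorry, I did not catch that.")


# Stand-in for the bone-conduction speaker: collect what would be played.
played: List[str] = []
interface = ClosedLoopInterface(toy_recognizer, toy_assistant, played.append)
```

Calling `interface.step([0.9, -0.8, 0.7])` passes the toy signal through all three stages and returns the answer for "time", while the same text is recorded in `played`. Keeping each stage as a separate callable mirrors the modular pipeline the list describes, so any stage could be swapped out without touching the others.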

Applications

“A primary focus of this project is to help support communication for people with speech disorders including conditions like ALS (amyotrophic lateral sclerosis) and MS (multiple sclerosis),” adds MIT.

Besides, AlterEgo provides a pathway for humans to integrate seamlessly with computers and enhance their cognitive abilities with the potential of the internet or AI. The system has implications for telecommunications, where people could communicate with the ease and bandwidth of vocal speech, with the added fidelity and privacy that silent speech provides.

The system acts as a digital memory; the user could internally record streams of information and access these at a later time through the system.

Brain behind AlterEgo

AlterEgo was designed by Arnav Kapur, Utkarsh Sarawgi and Eric Wadkins. Arnav Kapur, who was born in Delhi, is currently pursuing a PhD in Media Arts and Sciences at MIT. He was also featured in TIME’s 100 Best Inventions of 2020.

Utkarsh Sarawgi, also Indian-born, is a former research student at MIT who now works at Apple as an AI/ML engineer. He graduated from Birla Institute of Technology and Science, Pilani, India, with a B.E. in Electronics and Instrumentation Engineering.

Eric Wadkins is the machine learning lead of the AlterEgo project and an M.Eng. student in Computer Science/Artificial Intelligence.

(For more interesting stories on technology and innovation, keep reading The Inner Detail.)
