The field of artificial intelligence continues to evolve at a breakneck pace, with Google leading the charge in innovation. At the forefront of AI research, Google consistently pushes the boundaries of what’s possible, developing tools that make life easier, smarter, and more connected. From advanced multimodal assistants to personalized learning solutions, the company is exploring new horizons to integrate AI tools into every facet of daily life.

This article delves into some of the most exciting tools being developed by Google AI Labs, including Project Astra, a revolutionary AI assistant that can analyze and interpret surroundings, and Learn About, an educational assistant that simplifies complex topics. These innovations reflect Google’s commitment to solving real-world problems and empowering users with technology that’s intuitive and impactful.

Google Astra – AI Assistant

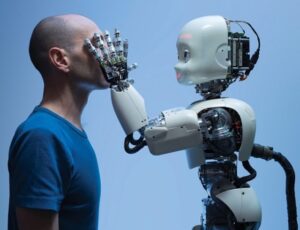

Google wants to bring an assistant like Jarvis from Iron Man to all its users, and that is what ‘Project Astra’ is about. Project Astra is a multimodal AI assistant being built by Google DeepMind. It can see, analyze and interpret a user’s surroundings, so users can ask Astra about anything around them.

In a demo video, Google showed how the assistant answers questions about a user’s surroundings through video and audio. For example, it helps people remember where they left their glasses, and it names a specific part of a speaker when the speaker is shown to it.

Astra is still at the prototype stage, but the demo gives a glimpse of what it could be once it is released.


Learn About – Learning Companion

Google’s “Learn About” is an AI-powered learning companion designed to help users explore complex topics. It functions like a conversational chatbot or search tool, but is personalized to individual learning capabilities and needs. The tool opens with the prompt, “What would you like to learn today?” and then users can input any topic, subject, file, or image. This allows users to delve deeper into subjects, utilizing Google’s experimental AI technology.

The interactive AI model provides in-depth responses, even for one-word terms. Each answer is tailored to the specific user’s level of knowledge, making it user-friendly and creating personalized conversations. Users are guided through challenging concepts with interactive guides, images, videos, and articles, creating a flexible and engaging learning environment. To start, users sign in with a Google account and can begin by asking questions, uploading images or documents, or exploring curated topics. For example, when asked about Silvia Federici’s concept of the “feminization of poverty,” Learn About provided a definition and detailed contributing factors with an interactive list. This highlights the tool’s ability to provide in-depth explanations and interactive learning experiences.

Illuminate – AI-generated Podcast

Google Illuminate is a podcast-making tool similar to NotebookLM in many ways, with one crucial difference: its focus is purely on the podcast. Both tools are designed to make it quicker and easier to distill knowledge into digestible chunks and present it in a convenient form. Illuminate is currently available only to a select group, with a waitlist for wider access. It isn’t clear whether it will ever become more than an experiment.

The principle behind these tools is that AI is very good at reading through large volumes of text, video or audio and then producing useful summaries. What gives Google an edge in this field is the large context window of its Gemini AI model: 2 million tokens, reportedly double that of its rivals. A context window is essentially how much information the AI can hold in a single session before it starts to forget or degrade.
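To make the idea of a context window concrete, here is a minimal Python sketch that checks whether a set of source documents would fit into a fixed token budget. The ratio of four characters per token is a rough rule of thumb assumed for illustration, not Gemini’s actual tokenizer, and the function names are hypothetical.

```python
# Rough context-window budgeting. CHARS_PER_TOKEN is an assumed heuristic,
# not the behaviour of any real tokenizer.
CONTEXT_WINDOW_TOKENS = 2_000_000  # figure quoted in the article
CHARS_PER_TOKEN = 4                # assumption for illustration only


def estimate_tokens(text: str) -> int:
    """Estimate the token count from character length (rounded up)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(documents: list[str], window: int = CONTEXT_WINDOW_TOKENS) -> bool:
    """Return True if the documents' estimated tokens fit in the window."""
    return sum(estimate_tokens(d) for d in documents) <= window
```

Under this estimate, roughly 8 million characters of source material would fill the window; a real tool would count tokens with the model’s own tokenizer instead.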

Whisk – Photo-to-Photo

Google’s “Whisk” is an experimental AI tool that allows users to generate unique images by using other photos as prompts. Unlike traditional image generators that rely solely on text prompts, Whisk enables users to drag and drop pictures into the tool, which then “remixes” them to create new visuals. Users can provide images to define the subject, scene, and style of their desired output and can also input text prompts if desired. Powered by Google’s Gemini AI and Imagen 3, Whisk captures the essence of the input images to produce creative and novel results. It’s designed for rapid visual exploration, allowing users to experiment with ideas in innovative ways.

Jules – AI Coding Agent

According to Google, Jules can create multi-step plans in response to a user’s prompt to modify a codebase. Based on the limited information Google has provided, Jules currently seems to require fairly specific instructions about what it should do. This means you will need a good understanding of what will fix a bug or extend a feature, and you cannot count on Jules to find and fix bugs on its own.

Jules analyzes user input along with the relevant codebase and creates a detailed plan outlining the changes it intends to implement. By breaking down each step in detail, Jules ensures developers have full control over its actions, enabling them to provide feedback or request modifications to the proposed plan.

Project Mariner – AI Agent

Google DeepMind has introduced “Project Mariner,” an AI agent that controls a web browser to complete tasks via a Chrome extension. This tool represents a step into the “agentic era” of AI, where systems can understand the world better and perform complex tasks with user supervision. Project Mariner can handle various types of content, including text, images, video, and audio, in real time. It remembers past interactions, allowing for more personalized and context-aware assistance. Currently, Project Mariner is a research prototype and not yet available for public use.

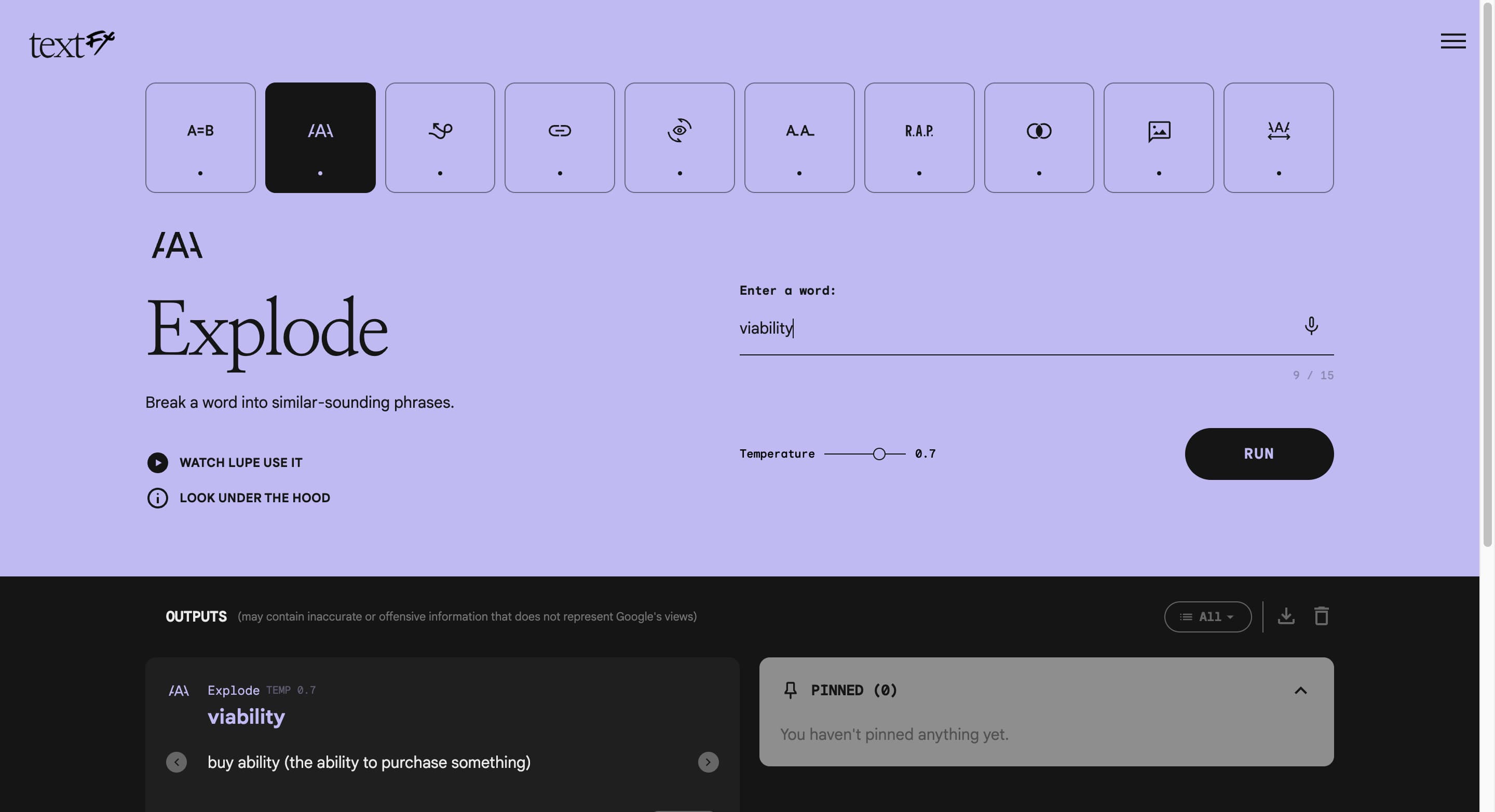

TextFX

TextFX, a suite of AI tools developed in collaboration with Lupe Fiasco, is an experimental project exploring how AI can expand human creativity. The tools were created using Google’s PaLM API and are based on Lupe Fiasco’s unique linguistic “tinkering” techniques, which involve deconstructing and reassembling language. TextFX also allows users to adjust the model temperature, influencing the level of creativity in the outputs. The prompts are saved as strings and sent to the PaLM 2 model via the PaLM API, which then generates text based on the user input. The results are then parsed, processed, and displayed on the frontend.
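To illustrate what adjusting the temperature does, here is a small Python sketch of temperature-scaled softmax, the general mechanism language models use when picking the next word. It is not TextFX’s code; the scores and the function name are made up for illustration.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores to probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate next words.
logits = [2.0, 1.0, 0.5]
cool = softmax_with_temperature(logits, 0.5)  # sharper: favours the top word
warm = softmax_with_temperature(logits, 1.5)  # flatter: more varied choices
```

A lower temperature concentrates probability on the most likely word, while a higher one spreads it out, which is why raising it tends to produce more surprising output.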

The core of TextFX lies in its ten prompts, each designed to explore different creative possibilities with words, phrases, or concepts. These prompts include SIMILE, EXPLODE, UNEXPECT, CHAIN, POV, ALLITERATION, ACRONYM, FUSE, SCENE, and UNFOLD. The tool uses a few-shot learning approach where it recognizes patterns from a small set of examples and replicates them for new inputs. The prompts are carefully crafted strings of text that guide the LLM to behave in a certain way. TextFX is available for anyone to try, and the code and prompts are also open-sourced.
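As a rough picture of what such a few-shot prompt string might look like, here is a minimal Python sketch that assembles one for a simile task. The examples, the wording, and the function name are hypothetical and are not taken from TextFX’s published prompts; the sketch only builds the string and does not call any model API.

```python
# Hypothetical examples; TextFX's actual prompts are published separately
# and are not reproduced here.
EXAMPLES = [
    ("time", "Time is like a river that never flows backward."),
    ("memory", "Memory is like a photograph left out in the sun."),
]


def build_few_shot_prompt(word: str) -> str:
    """Assemble a few-shot prompt that ends where the model should continue."""
    lines = ["Write a simile for the given word.", ""]
    for example_word, simile in EXAMPLES:
        lines += [f"Word: {example_word}", f"Simile: {simile}", ""]
    lines += [f"Word: {word}", "Simile:"]
    return "\n".join(lines)
```

Because the prompt ends with an unfinished “Simile:” line, a model that has picked up the pattern from the examples is nudged to complete it for the new word.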

Food Mood – Recipe by AI

Google Arts & Culture has launched “Food Mood,” an experimental AI tool that generates fusion recipes by combining two different cuisines. Created by artists at the Google Arts & Culture Lab, Food Mood uses Google’s Gemini 1.0 Pro via Vertex AI to produce unique dishes. This tool allows users to choose the type of dish they want (starter, main, or soup), specify the number of servings, and select two cuisines to combine.

Food Mood generates a recipe with a creative name, step-by-step instructions, cooking time, pro tips, and an AI-generated photo of the dish. The recipes are not developed by chefs or tested in kitchens. While the tool provides a fun way to experiment, users are advised to prioritize food safety and use their own judgement. Food Mood is not designed to challenge trained chefs, but it demonstrates Google’s advances in AI capabilities by inventing recipes that don’t already exist. The tool is intended to inspire creativity in the kitchen, similar to how other sites allow users to search for recipes using ingredients on hand.

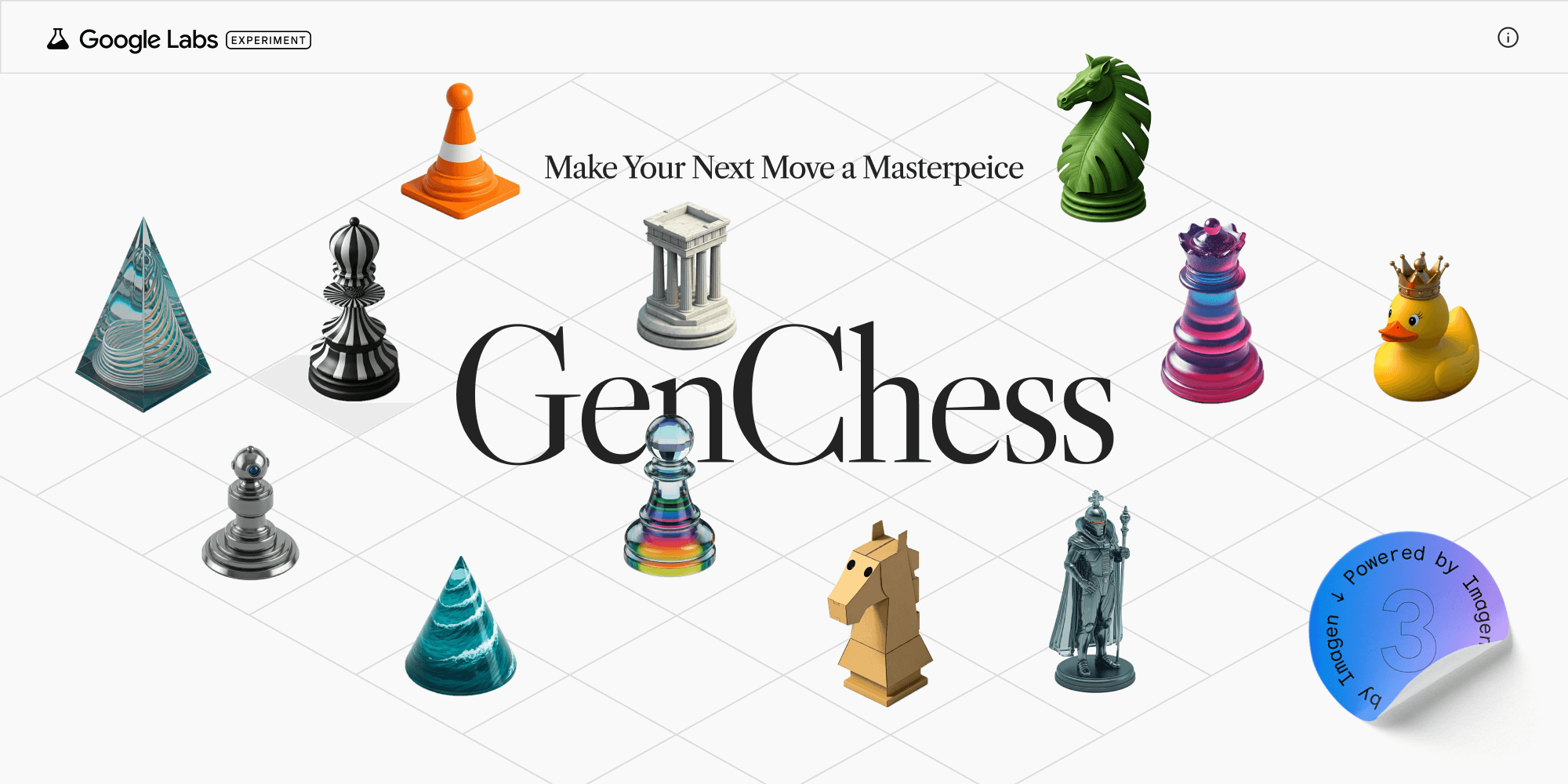

GenChess

Google has launched a new chess website called “GenChess” that uses generative AI to create custom chess pieces. The game begins by allowing users to input a short description of what they want their white pieces to look like, and the game uses its Imagen 3 AI model to generate the set. Then, it automatically generates a related theme for the black pieces. For example, if the user asks for sci-fi-themed pieces, the AI might create fantasy-themed pieces for the opponent.

GenChess is functional but not fully featured: it offers three difficulty settings and two time controls, but it lacks features such as the ability to review past moves or see captured pieces. The game’s default isometric view can be disorienting, although a top-down view is available in the settings. GenChess was released in conjunction with the 2024 World Chess Championship, of which Google is a main sponsor. Along with GenChess, Google has announced that a chess bot will be available within Gemini in December for Gemini Advanced subscribers. This will allow users to play by typing their moves, with Gemini displaying an updated chessboard as the game progresses. However, it is not clear whether the Gemini bot will have more advanced chess capabilities.

GenType

GenType is an experimental AI tool from Google that uses generative AI to create entire alphabets from a single user input. This tool was inspired by a Google teammate who wanted to use Imagen to help his children learn the alphabet by generating letters from familiar objects. GenType uses Google’s Imagen model and a simple prompt recipe to generate each letter of the alphabet. The user provides a single input describing the material or object they want the letters to be made out of, such as “grape jelly, on toast, aerial shot”. The tool then uses this input to create each letter of the alphabet from A to Z, sending out 26 separate requests, one for each letter. This results in a complete set of unique letters that are all consistent with the user’s chosen theme.

Users can then type out phrases with the custom alphabet, save and copy their favorite phrases or the entire alphabet, and regenerate individual letters if they are not satisfied with the initial result. The key to a successful alphabet generation is specificity in the prompt, particularly in describing the foreground, background, and style of the letters. For example, a user could specify “ladybugs” for the foreground, “on a green leaf” for the background, and “aerial photo” for the style. The GenType tool is flexible and has been used for a variety of purposes, including jewelry design, title sequences, type design, event invitations, and posters. GenType is intended to make AI more accessible and empower creativity.
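The one-request-per-letter approach and the foreground, background, and style recipe can be sketched in a few lines of Python. The template wording and the function name below are assumptions for illustration, not GenType’s real prompt recipe, and the sketch only prepares the prompts rather than calling Imagen.

```python
import string


def build_letter_prompts(foreground: str, background: str, style: str) -> dict[str, str]:
    """Return one prompt per letter A-Z, all sharing the same theme."""
    theme = f"{foreground}, {background}, {style}"
    # The template wording is an assumption, not GenType's real prompt recipe.
    return {
        letter: f"The letter {letter} made out of {theme}"
        for letter in string.ascii_uppercase
    }


prompts = build_letter_prompts("ladybugs", "on a green leaf", "aerial photo")
```

Each of the 26 prompts would then be sent as its own image request, which is also why a single letter can be regenerated without touching the rest of the alphabet.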

