Songs and music have been a constant companion to mankind, pleasing, enchanting and even soothing the soul. Global hits like Despacito and Gangnam Style lend weight to the idea that “music has an unbreakable bond with the human brain”, even if the nature of that bond is still poorly understood. This bond is what encouraged researchers from the Netherlands and India to design an experimental AI that can identify the song you are hearing on your headphones just by checking your brainwaves.

Music-identifying AI

Researchers from the Human-Centered Design department at Delft University of Technology in the Netherlands and the Cognitive Science department at the Indian Institute of Technology Gandhinagar collaborated on this study.

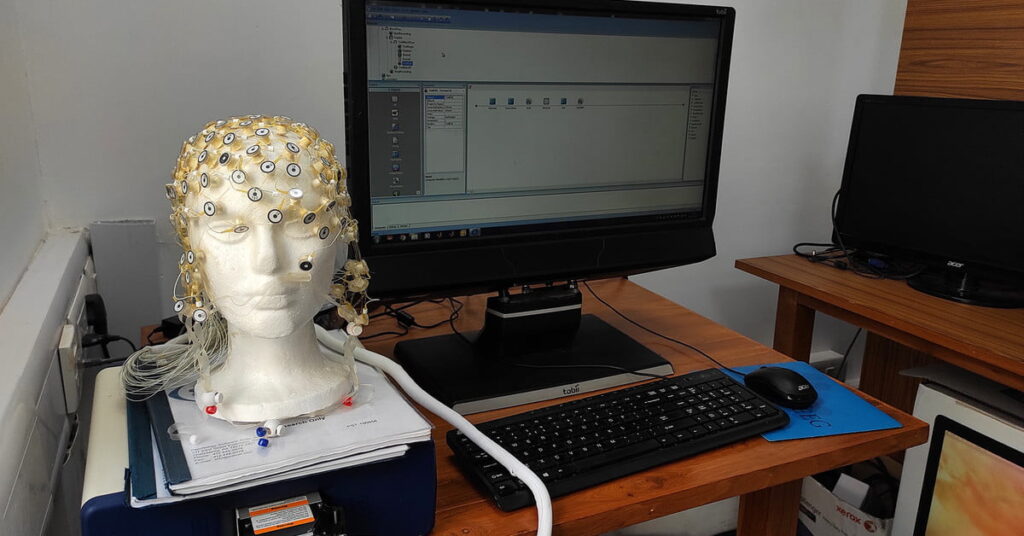

Twenty people were recruited and asked to listen to 12 songs on headphones. To help them focus on the music, the participants sat blindfolded in a dark room. Each wore an EEG (electroencephalogram) cap that recorded their brain activity while they listened.

The songs played to the participants were a mix of Western and Indian music spanning a range of genres. An artificial neural network was then trained to match the recorded brain activity to the song being played. When the resulting algorithm was tested on data it hadn’t seen before, it correctly identified the song with 80% accuracy, based entirely on the listener’s brainwaves.

“This way, we constructed a larger representative sample for training and testing. The approach was confirmed when obtaining impressive classification accuracies, even when we limited the training data to a smaller percentage of the dataset,” said Krishna Miyapuram, assistant professor of cognitive science and computer science at the Indian Institute of Technology Gandhinagar.

“Our research shows that individuals have personalized experiences of music,” Miyapuram said. “One would expect that the brains respond in a similar way processing information from different stimuli. This is true for what we understand as low-level features or stimulus-level features. [But] when it comes to music, it is perhaps the higher-level features, such as enjoyment, that distinguish between individual experiences.”

The future objective of the project is to uncover and map the relationship between EEG frequencies and musical frequencies. This may clarify whether greater aesthetic resonance is accompanied by greater neural resonance. In simple terms, the question is whether songs are easier to identify from the brainwaves of people who feel more strongly about the music. If so, this could be used to predict how much a person will enjoy a piece of music just by looking at their brainwaves and, ultimately, to understand why humans seek out music in the first place.

Future of reading brainwaves

Starting from music identification, short-term applications could include recommending music to individuals based on their brain responses. Lomas suggested that this could lead to powerful medium-term applications for gauging the “depth of experience” of a person engaging with media. With the help of brain-analysis tools, it can (and should) be possible to predict exactly how engaged a person is while watching a movie or listening to an album, for example. A brain-based measure of engagement could then be used to enhance certain experiences. Do you want to make your film more engaging for 90% of the audience? Then optimize this change of scene.

The ultimate aim would be “transcribing contents of the imagination to viewable form – thoughts to text; which could be the great future of brain-computer interfaces”, Lomas added, looking 20 years ahead.

AI reading human emotions

Brainwave reading isn’t new to the tech world; several research projects have pursued it for different purposes. One such study, for instance, focused on developing AI-powered robots capable of reading human emotions. In that separate study on “emotional intelligence”, robots accompany humans on tasks and act or react based on the humans’ emotions.