A firm is working on incorporating AI into robots so that they can read and understand human emotions and respond to them appropriately, bridging the gap between humans and robots.

Robots are moving into many fields as assistants or companions to people, with use cases ranging from the food industry to manual help. To make them more convenient for humans, robots are being equipped with artificial intelligence that teaches them to grasp human emotions and apply that knowledge to everything from interaction to business, marketing campaigns and health care. This page covers the how and why.

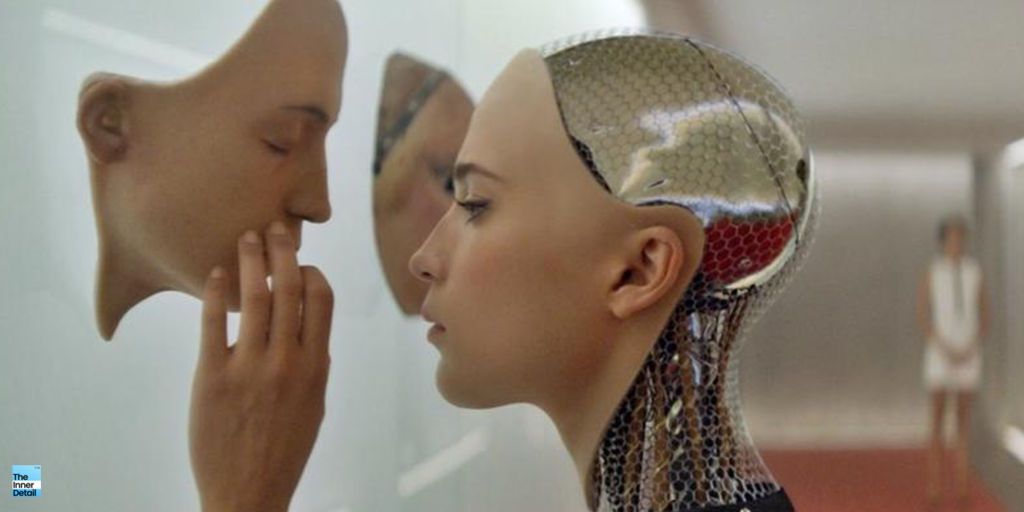

Robots reading Human Emotions

Researchers found that humans can recognize other people’s stress, aggression or excitement by watching their movements, even when they cannot see their facial expressions or hear their voices. This ability to read people from the way they move, in addition to their expressions and tone of voice, could be taught to robots so that they learn to understand emotions. A dedicated field concentrates on this study: “Emotional Intelligence”.

Emotional Intelligence, also called Affective Computing, traces its origin to 1995, when MIT Media Lab professor Rosalind Picard published “Affective Computing”.

“Just like we can understand speech and machines can communicate in speech, we also understand and communicate with humor and other kinds of emotions. And machines that can speak that language — the language of emotions — are going to have better, more effective interactions with us. It’s great that we’ve made some progress; it’s just something that wasn’t an option 20 or 30 years ago, and now it’s on the table”.

“One of the main goals in the field of human-robot interaction is to create machines that can recognize human emotions and respond accordingly.”


The Actual Study

To teach robots the emotions we humans possess, we first need to capture how people move and interact when they feel different emotions. To that end, a study by researchers from Warwick Business School, the University of Plymouth, the Donders Centre for Cognition at Radboud University in the Netherlands, and the Bristol Robotics Lab at the University of the West of England filmed children playing with a robot and a computer built into a table with a touchscreen on top.

The footage was shown to a team of 284 psychologists and computer scientists, who studied the children’s interactions (cooperative, competitive, dominant) and emotions (excitement, boredom, sadness).

A second group of analysts watched the video reduced to ‘stickman’ figures showing only the movements, and their assessments fully matched those of the first group. The researchers concluded that the children’s movements alone convey the same emotional labels, and that those labels are readily recognizable rather than guessed.


The researchers then trained a machine-learning algorithm to label the clips, identifying the type of social interaction, the emotions on display, and the strength of each child’s internal state, allowing it to compare which child felt more sad or excited.
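The article does not say which algorithm the researchers used, so the following is only a minimal sketch of the general idea, using a simple nearest-centroid classifier as a stand-in. The feature names (mean limb speed, torso lean), the sample values and the function names `fit_centroids` and `predict` are all hypothetical and invented for illustration.

```python
from math import dist
from statistics import fmean


def fit_centroids(samples):
    """Average the feature vectors of each label.

    samples: list of (feature_vector, label) pairs.
    Returns a dict mapping each label to its centroid.
    """
    grouped = {}
    for features, label in samples:
        grouped.setdefault(label, []).append(features)
    return {
        label: [fmean(column) for column in zip(*vectors)]
        for label, vectors in grouped.items()
    }


def predict(centroids, features):
    """Return the closest label plus a score per label.

    A score is the negative distance to that label's centroid,
    so a higher score means a closer match.
    """
    scores = {label: -dist(features, c) for label, c in centroids.items()}
    return max(scores, key=scores.get), scores


# Hypothetical per-clip movement features: (mean limb speed, torso lean).
# These numbers are invented, not taken from the study.
training_clips = [
    ((0.9, 0.2), "excited"),
    ((0.8, 0.3), "excited"),
    ((0.2, 0.7), "bored"),
    ((0.1, 0.8), "bored"),
]

centroids = fit_centroids(training_clips)
label, scores = predict(centroids, (0.85, 0.25))
print(label, scores)
```

The per-label scores give a rough way to compare two clips: the clip with the higher "excited" score sits closer to the excited examples. A real system would need far richer pose features and a properly validated model.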

“Our results suggest it is reasonable to expect a machine learning algorithm, and consequently a robot, to recognise a range of emotions and social interactions using movements, poses, and facial expressions. The potential applications are huge.”

The aim is to create a robot that can react to human emotions in difficult situations and get itself out of trouble without having to be monitored or told what to do.


Currently available Emotional Intelligence Robots

Firms have started deploying Emotion AI robots in fields like marketing, business and health care. Full-spectrum emotion AI will be needed to cope with the complexity of true human interaction, and that is the goal of organizations such as Affectiva. So far, the company has trained its algorithms on more than nine million faces from countries around the world to detect seven emotions: anger, contempt, disgust, fear, surprise, sadness, and joy. Affectiva focuses on marketing, measuring how people react to ads and working with firms to make better ones.

Expper Tech’s ‘Robin’ is a robot that roams hospitals, providing fun-filled emotional support to children undergoing treatment.

With the elderly population expected to reach 1.6 billion (about 17% of the population) by 2050, roughly twice today’s proportion, constant support and care will be needed, and emotionally intelligent robots could help provide it.

The risk of emotional robots being manipulated to act in someone’s favor is tiny compared with what they can do when working well, which is pushing Emotion AI into the ranks of the fastest-growing technologies of the era. Further developments in robotics have led to robots that not only read and understand emotions but also display them on a robotic face, as the robot Ameca does.

