A developer has used OpenAI’s GPT-3 technology to create a chatbot that lets users talk to their deceased loved ones with the help of AI.

A good conversation with our loved ones lifts the mood; we can all agree on that. Whether it happens on social media, over a phone call or in person, it feels good. Technology has now stepped into this part of our lives as well, creating a medium for us to speak to loved ones who have died. But is it ethical to talk with the dead, given the harms it could bring?

What do experts say about deadbots? Will the future make room for them? Let’s find out…

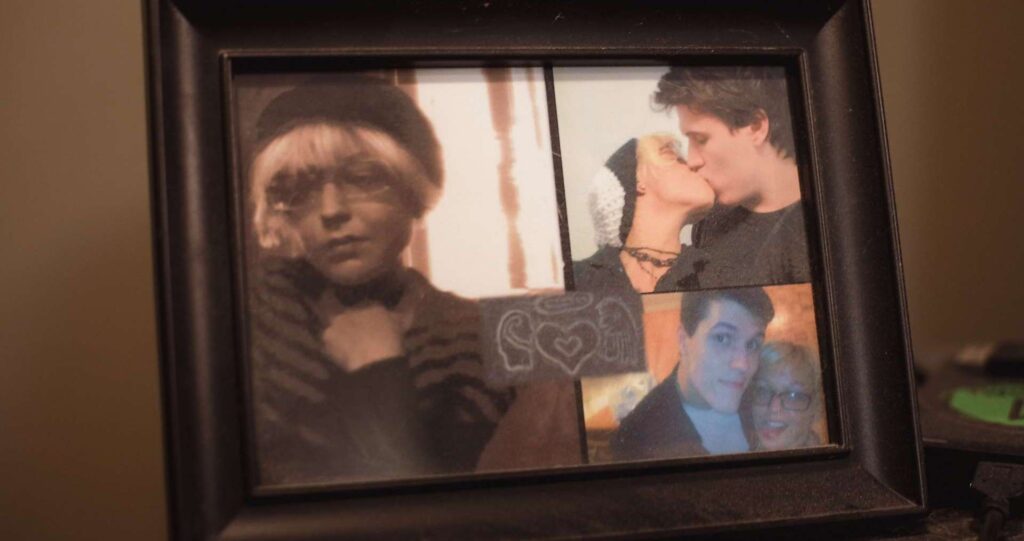

Deadbots entered the public conversation when Joshua Barbeau, a Canadian man, turned to an AI website, Project December, to create an artificial intelligence simulation of Jessica Pereira, his late fiancée, who died from a rare liver disease. Though Jessica had died eight years earlier, Joshua was still grieving her death, which led him to build a conversational bot that sounded like her.

Deadbots using GPT-3

Project December is an initiative of game developer Jason Rohrer, who wanted the platform to let people customize chatbots with the personality they wished to interact with, for a fee. It was built on an API for GPT-3, a text-generating language model from the artificial intelligence research company OpenAI, which Elon Musk co-founded and helped fund. The bot is fed a person's chat messages so that it picks up that person's traits and behavior. As explained, it then imitates the person's tone and way of talking, so that users feel as if the person themselves were speaking.
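To make the idea of "feeding" a bot with someone's chats more concrete, here is a minimal sketch of one common approach: placing a few sample messages into the text prompt sent to a language model. This is only an illustration, not how Project December actually works. The function `build_persona_prompt`, its parameters, the name "Alex" and the sample messages are all hypothetical, and no call to any real API is made.

```python
from typing import List


def build_persona_prompt(name: str, samples: List[str], user_message: str,
                         max_samples: int = 3) -> str:
    """Assemble a text prompt that shows a language model how `name` writes.

    Hypothetical helper for illustration only; not Project December's code.
    """
    # Keep only the most recent samples so the prompt stays short.
    recent = samples[-max_samples:] if max_samples > 0 else []

    lines = [f"The following is a conversation with {name}."]
    lines.append(f"Examples of how {name} writes:")
    lines.extend(f"- {s}" for s in recent)
    lines.append("")
    lines.append(f"User: {user_message}")
    # Ending on the persona's speaker label asks the model to continue in that voice.
    lines.append(f"{name}:")
    return "\n".join(lines)


# Made-up sample messages for a made-up person.
prompt = build_persona_prompt(
    "Alex",
    ["omg you will not believe my day",
     "haha ok fine, pizza it is",
     "miss you, call me later?"],
    "How are you?",
)
print(prompt)
```

The resulting text would then be sent to a text-generating model, which continues the conversation after the final "Alex:" line. The sketch also shows why the privacy questions raised later matter: the imitation is only possible because a person's real messages are handed to the system.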

OpenAI, however, was keen to prevent misuse of the technology: its guidelines explicitly forbid using its language models for sexual, amorous, self-harm or bullying purposes.

Jason Rohrer shut down the GPT-3 version of Project December, calling OpenAI’s approach hyper-moralistic and stating that people like Barbeau were ‘consenting adults’.

The incident raised the question of whether deadbots are ethical, and what impact they would have on individuals and on society.

What does the researcher say?

According to Sara Suarez-Gonzalo, the author of the research referenced here, deadbots can have detrimental effects on people and can lead to misuse of the technology.

She points out:

○ Developing a human-like chatbot that mimics the way a person talks, without that person's consent, raises privacy issues: the AI algorithm needs data to be trained on, and here the person’s social network data has to be revealed. Using a dead person's social media messages without their consent isn’t ethical, even though they are dead.

○ What if consent has been given? Even then, revealing a deceased person's entire social network may damage their honor, reputation or dignity, which in turn distresses the people close to them.

○ Finally, given the malleability and unpredictability of machine-learning systems, there is a risk that the consent provided by the person mimicked (while alive) does not mean much more than a blank check on its potential paths.

○ Also, it’s unclear who would take responsibility for the outcomes of a deadbot, especially when its effects are harmful. The creator, the customer or the technology? The third probably can’t be blamed, since it is only a tool.

Given these concerns, deadbots look less like a technological innovation and more like an instrument that invites misuse.

Technology should go along with nature, not against it!

Share your thoughts on this in the comments… Hope you find the page useful!

(For more interesting posts on information, technology and innovation, keep reading The Inner Detail.)

Reference: The Conversation