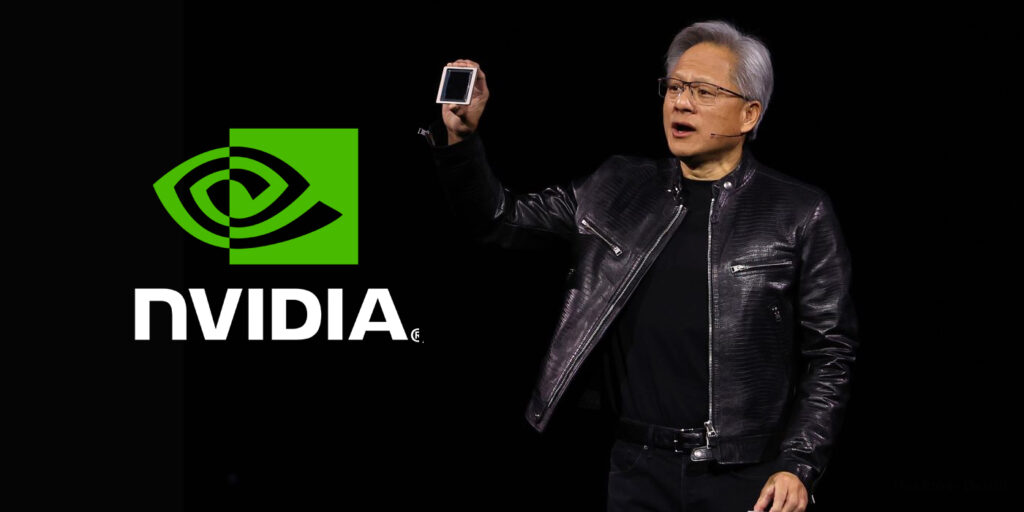

U.S.-based AI chipmaker Nvidia has unveiled its next-generation AI chips: the Blackwell B200 GPU and the GB200 superchip, which Nvidia bills as the world’s most powerful AI chip to date. The chips are meant to power a new era of computing.

Organizations and governments around the world are optimistic about artificial intelligence and want to capitalize on its growth by incorporating AI into their systems. Deploying AI chips lets organizations build and run large language models (the backbone of today’s AI) in real time, handling complex workloads that conventional chips can’t.

Nvidia had already rolled out the H100 AI chip, which helped make the firm a trillion-dollar company, valued higher than Alphabet and Amazon. Now it has unveiled two new AI chips that it says are more cost-efficient, deliver higher performance and consume less energy than the H100.

Unsurprisingly, companies have already queued up to adopt Nvidia’s new AI chips, the Blackwell B200 GPU and the GB200. The GB200 is essentially two B200 GPUs linked to a single Grace CPU.

What is the Blackwell B200 GPU AI chip?

Nvidia’s Blackwell architecture debuts with the new B200 GPU, which packs 208 billion transistors and offers up to 20 petaflops of FP4 compute. Two B200 GPUs are paired with a Grace CPU to make one GB200 superchip. Stacking these GB200 superchips builds up a complete rack-scale unit, which Nvidia calls the “GB200 NVL72”.

The Nvidia GB200 NVL72 is a multi-node, liquid-cooled, rack-scale system for the most compute-intensive workloads, or in simple words, the ‘monster’ that could make a substantial difference in AI computing. The GB200 NVL72 combines 36 GB200 superchips, for a total of 72 B200 GPUs and 36 Grace CPUs, interconnected by NVLink.
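As a quick sanity check on the figures above, the rack's composition can be tallied in a few lines of Python. This is only a back-of-the-envelope sketch: the per-chip numbers are the ones quoted in this article, the constant and function names are illustrative, and the aggregate FP4 figure is a plain multiplication of peak per-GPU throughput, not a measured benchmark.

```python
# Back-of-the-envelope tally of one GB200 NVL72 rack.
# All inputs are figures quoted in this article; names are illustrative.
GB200_PER_RACK = 36          # GB200 superchips in one NVL72 rack
B200_PER_GB200 = 2           # B200 GPUs per GB200 (72 GPUs / 36 superchips)
GRACE_PER_GB200 = 1          # Grace CPUs per GB200 (36 CPUs / 36 superchips)
FP4_PETAFLOPS_PER_B200 = 20  # peak FP4 throughput quoted per B200


def rack_totals():
    """Return GPU count, CPU count and summed peak FP4 petaflops for one rack."""
    gpus = GB200_PER_RACK * B200_PER_GB200
    cpus = GB200_PER_RACK * GRACE_PER_GB200
    peak_fp4 = gpus * FP4_PETAFLOPS_PER_B200
    return {"gpus": gpus, "cpus": cpus, "peak_fp4_petaflops": peak_fp4}


totals = rack_totals()
print(totals)  # {'gpus': 72, 'cpus': 36, 'peak_fp4_petaflops': 1440}
```

Summing peak throughput this way assumes perfect scaling across all 72 GPUs, so treat the 1,440-petaflop figure as a theoretical ceiling.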

According to Nvidia, the GB200 NVL72 reduces cost and energy consumption by up to 25x compared with the same setup built on H100 chips, while providing up to a 30x performance increase for large language model inference workloads. The rack contains nearly two miles of cabling, made up of 5,000 individual cables. A single rack can support a 27-trillion-parameter model. For comparison, GPT-4 is said to have 1.76 trillion parameters.

Nvidia also offers the HGX B200 server board, which works like a motherboard that aggregates the server components into one system. The HGX B200 links eight B200 GPUs through NVLink to support x86-based generative AI platforms.

How much better is it than other AI chips?

A GB200 NVL72 unit can offer up to 30 times the performance of an equivalent system equipped with H100 chips, and the new AI chips reduce cost and energy consumption by up to 25x.

Training a 1.8-trillion-parameter model can now be done with 2,000 Blackwell GPUs consuming four megawatts of power; the same job would previously have taken 8,000 Hopper GPUs and 15 megawatts.
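The ratios behind that comparison are easy to work out. The sketch below uses only the four figures quoted above; the variable names are illustrative, and the per-GPU numbers are simply total power divided by GPU count, which is an assumption about how to read the figures and not an official per-chip power rating.

```python
# Ratios implied by the training example quoted above.
hopper = {"gpus": 8000, "megawatts": 15.0}
blackwell = {"gpus": 2000, "megawatts": 4.0}

# How many times fewer GPUs and how many times less power Blackwell needs.
gpu_ratio = hopper["gpus"] / blackwell["gpus"]
power_ratio = hopper["megawatts"] / blackwell["megawatts"]

# Average power per GPU in kilowatts (total power / GPU count).
hopper_kw_per_gpu = hopper["megawatts"] * 1000 / hopper["gpus"]
blackwell_kw_per_gpu = blackwell["megawatts"] * 1000 / blackwell["gpus"]

print(f"GPU count reduction: {gpu_ratio:.2f}x")    # 4.00x
print(f"Power reduction:     {power_ratio:.2f}x")  # 3.75x
print(f"Hopper kW per GPU:    {hopper_kw_per_gpu:.3f}")     # 1.875
print(f"Blackwell kW per GPU: {blackwell_kw_per_gpu:.3f}")  # 2.000
```

Read this way, the power saving in this example comes from needing a quarter as many GPUs, not from each GPU drawing less power.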

Nvidia told journalists that one of the key improvements is a second-gen transformer engine that doubles the compute, bandwidth, and model size by using four bits for each neuron instead of eight (hence the 20 petaflops of FP4 mentioned earlier).
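To see why halving the bits matters, here is a rough sketch of how much memory a model's weights would occupy at eight bits versus four bits per value. It assumes one stored value per parameter and counts weights only (no activations, optimizer state or other runtime memory); the helper name `weight_terabytes` is made up for illustration, and the 1.8-trillion-parameter size is the one used in the training example above.

```python
def weight_terabytes(num_params: int, bits_per_param: int) -> float:
    """Approximate weight storage in decimal terabytes (1 TB = 1e12 bytes)."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 1e12


params = 1_800_000_000_000  # 1.8 trillion parameters

fp8_tb = weight_terabytes(params, 8)
fp4_tb = weight_terabytes(params, 4)

print(f"8-bit weights: {fp8_tb:.1f} TB")  # 1.8 TB
print(f"4-bit weights: {fp4_tb:.1f} TB")  # 0.9 TB
```

With half as many bits per value, the same memory and bandwidth can hold and move twice as many parameters, which is the intuition behind the "doubles the model size" claim.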

A second key difference only shows up when you link huge numbers of these GPUs: a next-gen NVLink switch lets 576 GPUs talk to each other, with 1.8 terabytes per second of bidirectional bandwidth per GPU.

| Parameter | Blackwell B200 GPU by Nvidia | Hopper H100 by Nvidia | Alveo U50 by AMD |
|---|---|---|---|
| Transistors | 208 billion | 80 billion | 50 billion |
| Memory | 1.4 TB | 640 GB | 8 GB |
| Price | $30,000 to $40,000 | $25,000 | $2,965 to $3,303 |

Supplying AI Chips to leading firms

AWS, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure will be among the first cloud service providers to offer Blackwell-powered instances, as will NVIDIA Cloud Partner program companies Applied Digital, CoreWeave, Crusoe, IBM Cloud and Lambda. Sovereign AI clouds will also provide Blackwell-based cloud services and infrastructure, including Indosat Ooredoo Hutchison, Nebius, Nexgen Cloud, Oracle EU Sovereign Cloud, the Oracle US, UK, and Australian Government Clouds, Scaleway, Singtel, Northern Data Group’s Taiga Cloud, Yotta Data Services’ Shakti Cloud and YTL Power International.

“GB200 will also be available on NVIDIA DGX™ Cloud, an AI platform co-engineered with leading cloud service providers that gives enterprise developers dedicated access to the infrastructure and software needed to build and deploy advanced generative AI models. AWS, Google Cloud and Oracle Cloud Infrastructure plan to host new NVIDIA Grace Blackwell-based instances later this year,” writes Nvidia.
